To do this, we first take a picture of a checkerboard pattern known as a Tsai grid.

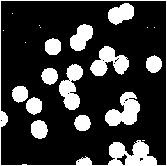

Using SciLab's locate function, we selected 25 points on this image and recorded their pixel coordinates. We also noted the real-world coordinates of these points, taking the left side of the board as the x-axis, the right side as the y-axis, and the vertical as the z-axis. Each square has a side length of one inch. The origin is shown in pink below.

The green dots are the 25 points we selected for our calibration. Next, we set up the matrix Q shown in the equation below for each of the 25 points. (The subscript i denotes image coordinates and o denotes real-world object coordinates.)
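For reference, here is a sketch of the usual form of this system, assuming the standard linear calibration with a_34 = 1. Each point contributes two rows to Q, one for each image coordinate. The symbol d for the stacked image coordinates is an assumed label, not taken from the original equation.

$$
\underbrace{\begin{bmatrix}
x_o & y_o & z_o & 1 & 0 & 0 & 0 & 0 & -y_i x_o & -y_i y_o & -y_i z_o \\
0 & 0 & 0 & 0 & x_o & y_o & z_o & 1 & -z_i x_o & -z_i y_o & -z_i z_o
\end{bmatrix}}_{\text{two rows of } Q}
\begin{bmatrix} a_{11} \\ a_{12} \\ \vdots \\ a_{33} \end{bmatrix}
=
\underbrace{\begin{bmatrix} y_i \\ z_i \end{bmatrix}}_{\text{two entries of } d}
$$

With 25 points, Q has 50 rows and 11 columns.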

We then solve for a using the equation below.
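A minimal sketch of this step in plain Python (not the SciLab code used for this activity), assuming the usual least-squares solution $a = (Q^T Q)^{-1} Q^T d$, where d stacks the measured image coordinates. The names `build_system` and `solve_normal_equations` are hypothetical helpers, and the row layout of Q is the standard one for this linear calibration with a_34 = 1.

```python
def build_system(world_pts, image_pts):
    """Stack two rows of Q and two entries of d per calibration point.

    world_pts: list of (x_o, y_o, z_o); image_pts: list of (y_i, z_i).
    Assumed layout: a = [a11, a12, a13, a14, a21, a22, a23, a24, a31, a32, a33].
    """
    Q, d = [], []
    for (xo, yo, zo), (yi, zi) in zip(world_pts, image_pts):
        Q.append([xo, yo, zo, 1, 0, 0, 0, 0, -yi * xo, -yi * yo, -yi * zo])
        d.append(yi)
        Q.append([0, 0, 0, 0, xo, yo, zo, 1, -zi * xo, -zi * yo, -zi * zo])
        d.append(zi)
    return Q, d


def solve_normal_equations(Q, d):
    """Solve (Q^T Q) a = Q^T d by Gauss-Jordan elimination with partial pivoting.

    Raises ZeroDivisionError if Q^T Q is singular (e.g. all points coplanar).
    """
    n = len(Q[0])
    # Augmented matrix [Q^T Q | Q^T d]
    M = [[sum(row[i] * row[j] for row in Q) for j in range(n)]
         + [sum(row[i] * di for row, di in zip(Q, d))]
         for i in range(n)]
    for col in range(n):
        # Bring the largest remaining entry in this column onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [mr - f * mc for mr, mc in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]
```

Calling `build_system` with the 25 world and image points and passing the result to `solve_normal_equations` would return the 11 entries of a in the order a_11 through a_33. A library least-squares routine would usually be better conditioned than forming Q^T Q explicitly; the normal equations are used here only to mirror the formula.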

The resulting values of matrix a can then be used in the following equations to compute the 2D image coordinates of a point from its real-world coordinates. (a_34 is set to 1.)
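As a sketch, assuming the standard projective form of this calibration, the image coordinates follow from the world coordinates as:

$$
y_i = \frac{a_{11} x_o + a_{12} y_o + a_{13} z_o + a_{14}}{a_{31} x_o + a_{32} y_o + a_{33} z_o + a_{34}},
\qquad
z_i = \frac{a_{21} x_o + a_{22} y_o + a_{23} z_o + a_{24}}{a_{31} x_o + a_{32} y_o + a_{33} z_o + a_{34}}
$$

Multiplying each equation through by its denominator and setting a_34 = 1 gives equations that are linear in the 11 unknown entries of a, which is why a can be found by least squares.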

Implementing this method:

Real world coordinates of the green dots:

1. (8,0,12)

2. (6,0,10)

3. (2,0,10)

4. (4,0,9)

5. (6,0,8)

6. (6,0,3)

7. (4,0,2)

8. (2,0,3)

9. (6,0,5)

10. (4,0,3)

11. (0,8,12)

12. (0,5,10)

13. (0,2,10)

14. (0,5,8)

15. (0,3,7)

16. (0,5,4)

17. (0,7,2)

18. (0,2,1)

19. (0,3,3)

20. (0,5,1)

21. (0,0,1)

22. (0,0,3)

23. (0,0,5)

24. (0,0,6)

25. (0,0,11)

Corresponding Image Coordinates (Pixel Value)

| point | y_image | z_image |
| --- | --- | --- |
| 1 | 23.214286 | 235.11905 |
| 2 | 53.571429 | 200 |
| 3 | 108.33333 | 205.35714 |
| 4 | 82.738095 | 185.11905 |
| 5 | 54.761905 | 163.09524 |
| 6 | 57.738095 | 73.214286 |
| 7 | 85.714286 | 63.095238 |
| 8 | 110.11905 | 86.904762 |
| 9 | 55.952381 | 108.92857 |
| 10 | 85.119048 | 80.357143 |
| 11 | 246.42857 | 236.90476 |
| 12 | 201.19048 | 202.38095 |
| 13 | 159.52381 | 205.95238 |
| 14 | 201.19048 | 166.66667 |
| 15 | 172.61905 | 152.97619 |
| 16 | 199.40476 | 80.357143 |
| 17 | 227.97619 | 55.952381 |
| 18 | 159.52381 | 55.357143 |
| 19 | 172.02381 | 85.119048 |
| 20 | 198.80952 | 45.238095 |
| 21 | 133.92857 | 60.714286 |
| 22 | 134.52381 | 92.261905 |
| 23 | 133.92857 | 125 |
| 24 | 133.33333 | 141.07143 |
| 25 | 132.7381 | 224.40476 |

Resulting matrix a

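| element | value |
| --- | --- |
| a_11 | -13.2561 |
| a_12 | 9.736856 |
| a_13 | -0.88994 |
| a_14 | 134.7117 |
| a_21 | -4.07642 |
| a_22 | -4.2728 |
| a_23 | 15.07773 |
| a_24 | 45.71427 |
| a_31 | -0.01371 |
| a_32 | -0.01504 |
| a_33 | -0.00559 |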
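As a quick check, the listed values can be plugged back into the projection equations. This is a minimal Python sketch rather than the SciLab code used for the activity. It assumes the 11 values are ordered a_11 through a_33 with a_34 = 1, and `project` is a hypothetical helper name.

```python
# Calibration values as listed above (rounded), ordered a11..a14, a21..a24, a31..a33.
a = [-13.2561, 9.736856, -0.88994, 134.7117,
     -4.07642, -4.2728, 15.07773, 45.71427,
     -0.01371, -0.01504, -0.00559]


def project(a, point):
    """Map a real-world point (x_o, y_o, z_o) to image coordinates (y_i, z_i)."""
    xo, yo, zo = point
    w = a[8] * xo + a[9] * yo + a[10] * zo + 1.0  # denominator, with a34 = 1
    y = (a[0] * xo + a[1] * yo + a[2] * zo + a[3]) / w
    z = (a[4] * xo + a[5] * yo + a[6] * zo + a[7]) / w
    return y, z


y21, z21 = project(a, (0, 0, 1))   # point 21: roughly (134.57, 61.13)
y1, z1 = project(a, (8, 0, 12))    # point 1: roughly (21.8, 235.7)
```

Because the listed entries of a are rounded, the results agree with the tabulated new image coordinates only to within a few hundredths of a pixel.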

New image coordinates after using the matrix a

| point | y_new | z_new |
| --- | --- | --- |
| 1 | 21.842763 | 235.67576 |
| 2 | 53.69017 | 199.59722 |
| 3 | 108.32033 | 205.44708 |
| 4 | 82.330771 | 184.49906 |
| 5 | 55.041758 | 162.50365 |
| 6 | 58.274085 | 73.794279 |
| 7 | 85.553674 | 63.772888 |
| 8 | 110.40641 | 86.620738 |
| 9 | 57.005504 | 108.60976 |
| 10 | 85.109881 | 80.396867 |
| 11 | 248.48566 | 236.84126 |
| 12 | 200.81792 | 201.5441 |
| 13 | 158.94669 | 205.61779 |
| 14 | 200.29104 | 164.72204 |
| 15 | 172.19655 | 151.17308 |
| 16 | 199.27642 | 93.813046 |
| 17 | 227.597 | 52.018523 |
| 18 | 158.96577 | 54.178965 |
| 19 | 171.88968 | 83.28296 |
| 20 | 198.54783 | 42.893636 |
| 21 | 134.57351 | 61.133497 |
| 22 | 134.29241 | 92.497568 |
| 23 | 134.00484 | 124.58259 |
| 24 | 133.85857 | 140.90327 |
| 25 | 133.10108 | 225.42079 |

Taking the absolute difference between these computed values and the actual values gives the following mean differences, in pixels, for each image coordinate:

y: 0.474705

z: 1.365954
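These means can be reproduced from the two tables. Here is a minimal Python sketch, assuming the reported numbers are mean absolute differences; each tuple holds (y_image, z_image, y_new, z_new) for one point, copied from the tables above.

```python
rows = [
    (23.214286, 235.11905, 21.842763, 235.67576),
    (53.571429, 200.0, 53.69017, 199.59722),
    (108.33333, 205.35714, 108.32033, 205.44708),
    (82.738095, 185.11905, 82.330771, 184.49906),
    (54.761905, 163.09524, 55.041758, 162.50365),
    (57.738095, 73.214286, 58.274085, 73.794279),
    (85.714286, 63.095238, 85.553674, 63.772888),
    (110.11905, 86.904762, 110.40641, 86.620738),
    (55.952381, 108.92857, 57.005504, 108.60976),
    (85.119048, 80.357143, 85.109881, 80.396867),
    (246.42857, 236.90476, 248.48566, 236.84126),
    (201.19048, 202.38095, 200.81792, 201.5441),
    (159.52381, 205.95238, 158.94669, 205.61779),
    (201.19048, 166.66667, 200.29104, 164.72204),
    (172.61905, 152.97619, 172.19655, 151.17308),
    (199.40476, 80.357143, 199.27642, 93.813046),
    (227.97619, 55.952381, 227.597, 52.018523),
    (159.52381, 55.357143, 158.96577, 54.178965),
    (172.02381, 85.119048, 171.88968, 83.28296),
    (198.80952, 45.238095, 198.54783, 42.893636),
    (133.92857, 60.714286, 134.57351, 61.133497),
    (134.52381, 92.261905, 134.29241, 92.497568),
    (133.92857, 125.0, 134.00484, 124.58259),
    (133.33333, 141.07143, 133.85857, 140.90327),
    (132.7381, 224.40476, 133.10108, 225.42079),
]

# Mean absolute difference per image coordinate, in pixels.
mean_dy = sum(abs(yn - ym) for ym, _, yn, _ in rows) / len(rows)
mean_dz = sum(abs(zn - zm) for _, zm, _, zn in rows) / len(rows)

# 1-based index of the point with the largest z difference.
worst_z = max(range(len(rows)), key=lambda k: abs(rows[k][3] - rows[k][1])) + 1

print(f"y: {mean_dy:.4f}  z: {mean_dz:.4f}  largest z difference at point {worst_z}")
```

This gives about 0.4747 for y and 1.3660 for z, matching the values above. Point 16 alone contributes a z difference of about 13.5 pixels, so it accounts for a large share of the z mean.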

-o0o-

Collaborator: Cole Fabros

-o0o-

Grade: 10/10, since I implemented the camera calibration well and the mean difference is under half a pixel in y and about 1.4 pixels in z. :)